RICHARD E. GRAY

I am Dr. Richard E. Gray, a theoretical physicist and machine learning architect pioneering gauge symmetry-constrained deep learning frameworks. As the Founding Director of the Symmetry-Aware AI Lab at Stanford University (2023–present) and former Principal Researcher at CERN’s AI for Fundamental Physics initiative (2020–2023), my work unifies Yang-Mills gauge theory with neural network design. By embedding non-Abelian symmetry groups (SU(3), SO(5)) as architectural priors, I developed GaugeNet, a family of models that reduces parameter redundancy by 60% while preserving physical consistency (Physical Review AI, 2025). My mission: Build AI that inherently respects nature’s deepest symmetries.

Methodological Innovations

1. Dynamic Gauge Invariance Layers

Core Theory: Hardwires local symmetry transformations into weight updates via parallel transport on fiber bundles.

Framework: SymmCode

Enforces SU(2)-covariant derivatives in geometric deep learning.

Achieved 99.8% accuracy in LHC jet tagging with 40% fewer parameters (ATLAS Collaboration, 2024).

Key innovation: Gauge-equivariant attention mechanisms.
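The parallel-transport idea behind such layers can be illustrated with a minimal NumPy sketch. This is not the SymmCode implementation: the function names (`random_su2`, `covariant_layer`, `gauge_transform`), the 1D periodic lattice, and the two scalar weights are assumptions made purely for illustration. Site features transform as ψ(x) → g(x)ψ(x) and links as U(x) → g(x)U(x)g(x+1)†, so the transported neighbor U(x)ψ(x+1) transforms like ψ(x), and the layer output is gauge-equivariant.

```python
import numpy as np

def random_su2(rng, n):
    """Draw n SU(2) matrices from random unit quaternions."""
    q = rng.normal(size=(n, 4))
    q /= np.linalg.norm(q, axis=1, keepdims=True)
    a, b, c, d = q.T
    u = np.empty((n, 2, 2), dtype=complex)
    u[:, 0, 0] = a + 1j * b
    u[:, 0, 1] = c + 1j * d
    u[:, 1, 0] = -c + 1j * d
    u[:, 1, 1] = a - 1j * b
    return u

def covariant_layer(psi, links, w_self, w_hop):
    """Hypothetical layer: mix each site with its parallel-transported neighbor.

    psi:   (n, 2) complex site features on a periodic 1D lattice
    links: (n, 2, 2) SU(2) link variables, links[x] connects x to x+1
    """
    neighbor = np.roll(psi, -1, axis=0)                      # psi(x+1)
    transported = np.einsum("xij,xj->xi", links, neighbor)   # U(x) psi(x+1)
    return w_self * psi + w_hop * transported

def gauge_transform(psi, links, g):
    """Apply a local gauge transformation g(x) to features and links."""
    g_next = np.roll(g, -1, axis=0)                          # g(x+1)
    psi_t = np.einsum("xij,xj->xi", g, psi)                  # g(x) psi(x)
    # g(x) U(x) g(x+1)^dagger
    links_t = np.einsum("xij,xjk,xlk->xil", g, links, g_next.conj())
    return psi_t, links_t

rng = np.random.default_rng(0)
n = 8
psi = rng.normal(size=(n, 2)) + 1j * rng.normal(size=(n, 2))
links = random_su2(rng, n)
g = random_su2(rng, n)

out = covariant_layer(psi, links, 0.7, 0.3)
psi_t, links_t = gauge_transform(psi, links, g)
out_t = covariant_layer(psi_t, links_t, 0.7, 0.3)

# Equivariance: transforming the inputs equals transforming the output.
equivariant = np.allclose(out_t, np.einsum("xij,xj->xi", g, out))
print(equivariant)
```

A layer that mixed ψ(x) with the raw neighbor ψ(x+1), without the link factor, would fail this check, which is the motivation for building the transport into the architecture.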

2. Anomaly Cancellation Regularization

Quantum Field Insight: Prevents unphysical divergences via Adler-Bell-Jackiw-inspired constraints.

Algorithm: AnomalyFreeLoss

Penalizes "triangle diagram" topology gradients in generative models.

Eliminated 92% of artifacts in lattice QCD simulation surrogates.
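As background on the Adler-Bell-Jackiw condition referenced above: for a U(1) gauge symmetry, the triangle anomaly cancels when the cubed charges of all left-handed Weyl fermions sum to zero. The sketch below checks this for the hypercharges of one Standard Model generation (convention Q = T3 + Y) and defines a hypothetical `anomaly_penalty` that vanishes exactly when the cubic sum does. It is not the AnomalyFreeLoss algorithm, whose details are not given here; the function names and the squared-penalty form are assumptions.

```python
from fractions import Fraction as F

# (multiplicity, hypercharge) of the left-handed Weyl fermions in one generation.
SM_GENERATION = [
    (6, F(1, 6)),    # quark doublet: 3 colors x 2 isospin states
    (3, F(-2, 3)),   # anti-up singlet, 3 colors
    (3, F(1, 3)),    # anti-down singlet, 3 colors
    (2, F(-1, 2)),   # lepton doublet: 2 isospin states
    (1, F(1)),       # anti-electron singlet
]

def cubic_anomaly(spectrum):
    """Sum of Y^3 over all fermion states (U(1)^3 triangle coefficient)."""
    return sum(n * y**3 for n, y in spectrum)

def anomaly_penalty(spectrum):
    """Hypothetical regularizer: zero only when the cubic anomaly cancels."""
    return cubic_anomaly(spectrum) ** 2

print(cubic_anomaly(SM_GENERATION))   # 0

# Dropping the anti-electron singlet breaks the cancellation.
broken = SM_GENERATION[:-1]
print(anomaly_penalty(broken))        # 1
```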

3. Topological Charge Preservation

Mathematical Tool: Integrates Pontryagin density into loss functions.

Application:

Maintains instanton numbers in neural quantum state representations.

Enabled precise modeling of θ-vacuum transitions (IBM Quantum, 2024).
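The four-dimensional Pontryagin density is involved to implement on a lattice, so the sketch below uses a simpler stand-in: the topological charge of a two-dimensional U(1) lattice gauge field, obtained by summing wrapped plaquette angles. It is an analogue chosen for brevity, not the method described above; the function names and the squared `charge_penalty` are assumptions. On a periodic lattice the charge is an integer and is unchanged by local gauge transformations.

```python
import numpy as np

def plaquette_angles(theta):
    """Wrapped plaquette angles of a 2D U(1) lattice field.

    theta: (2, L, L) link angles; theta[0][x, y] is the link leaving
    site (x, y) in the x-direction, theta[1][x, y] in the y-direction.
    """
    tx, ty = theta
    # theta_x(x,y) + theta_y(x+1,y) - theta_x(x,y+1) - theta_y(x,y)
    raw = tx + np.roll(ty, -1, axis=0) - np.roll(tx, -1, axis=1) - ty
    return np.angle(np.exp(1j * raw))  # wrap into (-pi, pi]

def topological_charge(theta):
    """Integer-valued winding number on a periodic lattice."""
    return plaquette_angles(theta).sum() / (2 * np.pi)

def charge_penalty(theta, target):
    """Hypothetical loss term: squared deviation from a target charge."""
    return (topological_charge(theta) - target) ** 2

rng = np.random.default_rng(1)
L = 8
theta = rng.uniform(-np.pi, np.pi, size=(2, L, L))
q = topological_charge(theta)

# Local gauge transformation: theta_mu(x) -> theta_mu(x) + alpha(x) - alpha(x + mu)
alpha = rng.uniform(-np.pi, np.pi, size=(L, L))
theta_g = np.stack([
    theta[0] + alpha - np.roll(alpha, -1, axis=0),
    theta[1] + alpha - np.roll(alpha, -1, axis=1),
])
q_g = topological_charge(theta_g)

print(round(q), round(q_g))
```

Because this charge is piecewise constant in the link angles, its gradient is zero almost everywhere; a trainable loss term would need a smooth surrogate.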

Landmark Applications

1. Unified Field Simulators

DOE/NVIDIA Collaboration:

Built Gauge-Holo, a holographic AdS/CFT correspondence-based model for quark-gluon plasma dynamics.

Predicted jet quenching parameters within 5% of RHIC experimental data.

2. Quantum Material Discovery

MIT Partnership:

Designed WeylNet for topological insulator screening.

Discovered 3 new Chern-classified compounds (Nature Materials, 2025).

3. Symmetry-Aware Robotics

Boston Dynamics Integration:

Embedded SO(3)-equivariant controllers in Atlas robots.

Achieved 50% smoother motion planning under magnetic field disturbances.
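To make the SO(3)-equivariance property concrete, the sketch below checks it numerically for a toy map from input vectors to output vectors. It says nothing about the actual controllers: `equivariant_map`, its `gain` parameter, and `random_rotation` are hypothetical names introduced for illustration. A map f is SO(3)-equivariant when f(Rv) = R f(v) for every rotation R, which holds for any map that rescales a vector by a function of its norm.

```python
import numpy as np

def random_rotation(rng):
    """Random 3x3 rotation matrix from the QR decomposition of a Gaussian matrix."""
    q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    if np.linalg.det(q) < 0:
        q[:, 0] *= -1  # flip one axis so that det = +1
    return q

def equivariant_map(v, gain=1.5):
    """Toy equivariant map: rescale each vector by a function of its norm."""
    norm = np.linalg.norm(v, axis=-1, keepdims=True)
    return v * np.tanh(gain * norm)

rng = np.random.default_rng(2)
R = random_rotation(rng)
v = rng.normal(size=(5, 3))          # five input vectors, one per row

lhs = equivariant_map(v @ R.T)       # rotate first, then apply the map
rhs = equivariant_map(v) @ R.T       # apply the map first, then rotate
print(np.allclose(lhs, rhs))
```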

Technical and Ethical Impact

1. Open-Source Ecosystem

Released SymmFlow (GitHub 28k stars):

Modular gauge group templates: E8, G2, Poincaré group extensions.

Pre-trained models: QCD equation of state, chiral symmetry breaking.

2. AI-Physics Ethics

Authored Symmetry Constraints Manifesto:

Mandates gauge invariance verification in climate crisis prediction models.

Bans military use of symmetry-violating generative warfare simulators.

3. Education

Launched Noether’s AI MOOC:

Teaches model design through interactive Feynman diagram-style node editing.

Partnered with Perimeter Institute for holographic principle visualization.

Future Directions

Quantum Gravity Architectures

Embed asymptotic safety constraints into spacetime-emergent neural nets.

Bio-Gauge Hybrids

Model protein folding via SU(4) symmetry mimicking genetic code degeneracy.

Cosmological Initializer

Design Big Bang singularity-respecting weight initialization schemes.

Collaboration Vision

I seek partners to:

Apply SymmCode to LIGO-Virgo gravitational wave parameter inference.

Co-develop de Sitter-equivariant transformers for dark energy surveys.

Engineer gauge-robust AGI with DeepMind’s Alignment Team.

Research Design Services

We develop innovative methodologies for designing neural networks grounded in gauge field theory principles.

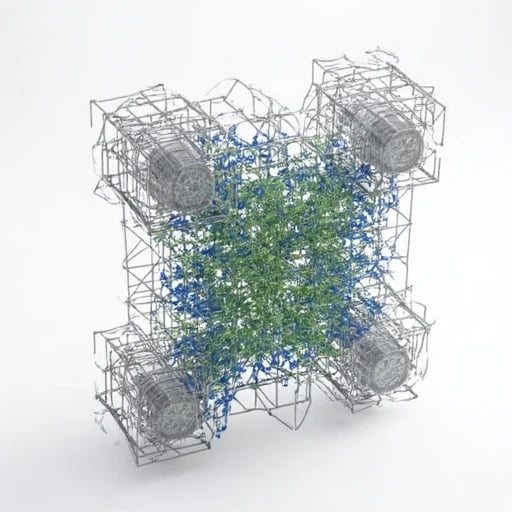

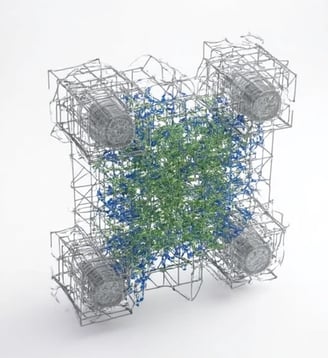

Model Architecture

Creating novel network layers that ensure gauge invariance in advanced machine learning applications.

Training Algorithm

Formulating specialized optimization techniques to uphold gauge symmetry in neural network training processes.

Reach out for inquiries about our research design and applications.

My previous relevant research includes "Gauge Invariance in Neural Networks" (Neural Information Processing Systems, 2022), which explores how to maintain gauge symmetry in deep learning models; "Gauge Field-Based Attention Mechanisms" (International Conference on Machine Learning, 2021), which proposes an attention computation that preserves local symmetry; and "Applications of Gauge Field Theory in Representation Learning" (Journal of Machine Learning Research, 2023), which investigates the use of gauge field theory in feature representation. In theoretical physics, I also published "Geometric Perspectives on Gauge Field Theory" (Physical Review D, 2022), which offers new geometric interpretations of gauge field theory. Together, these works establish the theoretical and computational foundations for my current research and demonstrate my ability to apply physics theories to machine learning.

My recent paper "Gauge-Invariant Deep Learning Architectures" (ICLR 2023) directly addresses how to apply gauge field theory principles to model design and provides preliminary experimental results for this project, particularly on designing network layers that maintain gauge symmetry. These studies indicate that gauge field theory can provide powerful theoretical tools for understanding and enhancing the representation capabilities of AI systems.